返回> 网站首页

CUDA 多个独立任务并行执行 - 示例

![]() yoours2024-04-19 16:44:06

yoours2024-04-19 16:44:06

简介一边听听音乐,一边写写文章。

一、多线程并行执行CUDA核函数

示例创建了两个核函数,并且在多线程中并行调用,在jetson上测试正常。

二、示例

#include <stdio.h>

#include <stdlib.h>

#include <cuda_runtime.h>

#include <pthread.h>

#define NUM_THREADS 10

#define NUM_ELEMENTS 3

// 内核函数,它将数组中的每个元素乘以一个常数

__global__ void multiplyKernel(float *data, float constant) {

int idx = threadIdx.x + blockIdx.x * blockDim.x;

if (idx < NUM_ELEMENTS) {

data[idx] *= constant;

}

}

__global__ void multiplyKernel2(float *data, float constant) {

int idx = threadIdx.x + blockIdx.x * blockDim.x;

if (idx < NUM_ELEMENTS) {

data[idx] *= (constant*100);

}

}

bool bOK = true;

// 线程函数,每个线程将运行在自己的CUDA流中

void* threadFunction(void* arg) {

int threadId = *(int*)arg;

cudaStream_t stream;

cudaError_t err;

// 为每个线程创建一个新的CUDA流

err = cudaStreamCreate(&stream);

if (err != cudaSuccess) {

printf("Error creating stream in thread %d: %s\n", threadId, cudaGetErrorString(err));

return NULL;

}

// 分配设备内存

float *devData;

err = cudaMallocAsync(&devData, NUM_ELEMENTS * sizeof(float), stream);

if (err != cudaSuccess) {

printf("Error allocating memory in thread %d: %s\n", threadId, cudaGetErrorString(err));

cudaStreamDestroy(stream);

return NULL;

}

// 初始化设备内存(这只是一个示例,实际数据可能来自其他地方)

float hostData[NUM_ELEMENTS];

for (int i = 0; i < NUM_ELEMENTS; ++i) {

hostData[i] = 1.0f;

}

err = cudaMemcpyAsync(devData, hostData, NUM_ELEMENTS * sizeof(float), cudaMemcpyHostToDevice, stream);

if (err != cudaSuccess) {

printf("Error copying data to device in thread %d: %s\n", threadId, cudaGetErrorString(err));

cudaFree(devData);

cudaStreamDestroy(stream);

return NULL;

}

// 在流中启动内核

float constant = (float)threadId; // 每个线程使用不同的常数

if(bOK)

{

bOK = false;

multiplyKernel2<<<1, 256, 0, stream>>>(devData, constant);

}else{

multiplyKernel<<<1, 256, 0, stream>>>(devData, constant);

}

// 等待内核完成(这只是一个示例,通常你可能不会在每个线程中同步)

err = cudaStreamSynchronize(stream);

if (err != cudaSuccess) {

printf("Error synchronizing stream in thread %d: %s\n", threadId, cudaGetErrorString(err));

}

err = cudaMemcpyAsync(hostData, devData, NUM_ELEMENTS * sizeof(float), cudaMemcpyDeviceToHost, stream);

if (err != cudaSuccess) {

printf("Error copying data to device in thread %d: %s\n", threadId, cudaGetErrorString(err));

cudaFree(devData);

cudaStreamDestroy(stream);

return NULL;

}

for (int i = 0; i < NUM_ELEMENTS; ++i)

{

printf("%f \n", hostData[i]);

}

// 释放资源

cudaFree(devData);

cudaStreamDestroy(stream);

return NULL;

}

int main() {

pthread_t threads[NUM_THREADS];

int threadIds[NUM_THREADS];

// 创建线程

for (int i = 0; i < NUM_THREADS; ++i) {

threadIds[i] = i;

pthread_create(&threads[i], NULL, threadFunction, (void*)&threadIds[i]);

}

// 等待所有线程完成

for (int i = 0; i < NUM_THREADS; ++i) {

pthread_join(threads[i], NULL);

}

return 0;

}

编译:

nvcc --cudart shared -o k3 k3.cu -std=c++11 -lpthread

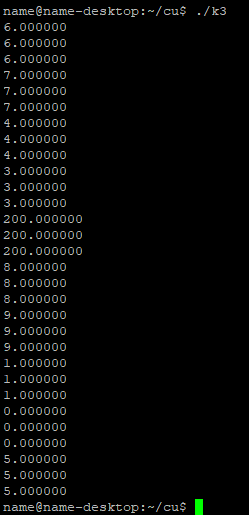

执行结果,如图: